Let's suppose that we have the same dynamic model we presented before, that is, the Lorenz system of differential equations. Remember?

In order to perform a fit, we need to define some sort of objective function, which will then be minimised.

Now the two most important pieces are in place. What we still need is some data to fit and a starting point for the fit. We get those in the next few lines, followed by the actual command to fit the guess to our target (note: the target is referenced explicitly within the objective function itself, in the modCost() line).
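The FME code itself isn't reproduced here, so here is a dependency-free sketch of the same idea. It assumes the Lorenz formulation used in the deSolve documentation (dX = aX + YZ, dY = b(Y - Z), dZ = -XY + cY - Z), builds a synthetic target with a = -3, b = -5, c = 30, and minimises a sum-of-squares objective with base R's optim() (Nelder-Mead) standing in for modFit(). The rk4() integrator is my own stand-in for deSolve::ode(), and the starting guess is made up.

```r
# Lorenz equations in deSolve's func(t, state, parms) convention
lorenz <- function(t, state, parms) {
  with(as.list(c(state, parms)), {
    dX <- a * X + Y * Z
    dY <- b * (Y - Z)
    dZ <- -X * Y + c * Y - Z
    list(c(dX, dY, dZ))
  })
}

# Hypothetical fixed-step 4th-order Runge-Kutta, standing in for deSolve::ode()
rk4 <- function(y0, times, func, parms) {
  out <- matrix(NA_real_, nrow = length(times), ncol = length(y0),
                dimnames = list(NULL, names(y0)))
  y <- y0
  out[1, ] <- y
  for (i in seq_along(times)[-1]) {
    t <- times[i - 1]
    h <- times[i] - t
    k1 <- func(t, y, parms)[[1]]
    k2 <- func(t + h / 2, y + h / 2 * k1, parms)[[1]]
    k3 <- func(t + h / 2, y + h / 2 * k2, parms)[[1]]
    k4 <- func(t + h, y + h * k3, parms)[[1]]
    y <- y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    out[i, ] <- y
  }
  cbind(time = times, out)
}

times  <- seq(0, 1, by = 0.02)
state0 <- c(X = 1, Y = 1, Z = 1)
target <- rk4(state0, times, lorenz, c(a = -3, b = -5, c = 30))  # synthetic target

# Objective function: sum of squared residuals over X, Y and Z
objective <- function(p) {
  p <- setNames(p, c("a", "b", "c"))
  sim <- rk4(state0, times, lorenz, p)
  ssr <- sum((sim[, -1] - target[, -1])^2)
  if (is.finite(ssr)) ssr else 1e10  # penalise runs that blow up
}

guess <- c(a = -2, b = -4, c = 25)  # hypothetical starting point
fit <- optim(guess, objective, method = "Nelder-Mead",
             control = list(maxit = 500))
round(fit$par, 2)
```

As in the post, the target is baked into the objective function rather than passed as an argument; raising maxit is the base-R equivalent of allowing the minimiser more iterations.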

Now we're in business! After running the code above, the system suggests coefficient values fairly close to the target's own: -3.6, -5.2 and 27.7, compared to -3, -5 and 30.

Let's have a look at the solutions: in blue, our target; in red, our initial guess; in green, our final fit (bullet).

OK, the fit isn't that good if you look at it closely, but it's better than nothing... If you want to get closer, just increase the number of iterations allowed to the minimiser. It isn't really necessary, though: we're going to move on to something more interesting.

How do we know that the parameters we're fitting are sensible? Yes, they certainly look so in this case, but in real life we have no clue what the real numbers may be, or we wouldn't be fitting them in the first place. Perhaps the trajectory we're fitting against is just too short and doesn't carry enough information to distinguish between an infinite number of radically different solutions, which fit well locally but diverge wildly later on... Pretty much assured to be the case with Lorenz's system, I'd say...

So, we run a sensitivity analysis on the system - the authors of the FME package have made this very easy for us:

The result of this is then fed to a collinearity analysis function which, at least as I understand it in 'non-statistician' speak, checks that simultaneous changes in (a subset of) your parameters do not affect the model in too similar (collinear) a way.
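FME's sensFun() and collin() do this properly on the real model. Purely as an illustration of what goes on underneath, the sketch below uses a hypothetical toy model, y(t) = a * exp(-k * t), in place of the Lorenz run. It estimates normalised sensitivities by forward differences (scaled by parameter and output values, which I believe matches sensFun's default scaling) and computes a collinearity index the way I understand the FME documentation describes it: scale each column of the sensitivity matrix to unit length, then take 1 / sqrt of the smallest eigenvalue of t(S) %*% S. The function names are my own.

```r
# Hypothetical toy model standing in for the Lorenz integration
toy_model <- function(p, times = seq(0, 2, by = 0.25)) {
  unname(p["a"] * exp(-p["k"] * times))
}

# Normalised sensitivities S[i, j] = (dy_i / y_i) / (dp_j / p_j),
# estimated with one forward difference per parameter
sens_matrix <- function(model, p, rel_step = 1e-6) {
  y0 <- model(p)
  sapply(names(p), function(nm) {
    p_new <- p
    p_new[nm] <- p[nm] * (1 + rel_step)
    (model(p_new) - y0) / y0 / rel_step
  })
}

# Collinearity index of the parameters in the columns of S
collinearity_index <- function(S) {
  S <- as.matrix(S)
  Sn <- sweep(S, 2, sqrt(colSums(S^2)), "/")   # unit-length columns
  ev <- eigen(crossprod(Sn), symmetric = TRUE, only.values = TRUE)$values
  1 / sqrt(max(min(ev), 0))                    # Inf if exactly collinear
}

p <- c(a = 2, k = 0.5)
S <- sens_matrix(toy_model, p)
collinearity_index(S)
```

Columns pointing in the same direction (two parameters whose effects can compensate each other) push the smallest eigenvalue towards zero and the index towards infinity; fully independent effects give an index of 1.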

So, here's your result. If you print the 'Coll' variable, you'll see a table-like data frame in which a collinearity value is attached to each combination of parameters... Like this:

Now, according to the authors of the package, values below 20 mean that it's OK to trust a fit of that particular combination; values above 20 are a no-no...
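To make the table concrete, here is a base-R sketch that builds something shaped like it: one row per subset of two or more parameters, 0/1 membership columns, the subset size N, the collinearity index and a flag for the threshold of 20 mentioned above. collinearity_table() and the sensitivity matrix are hypothetical; the index follows the unit-length-columns, smallest-eigenvalue definition as I understand it from the FME documentation.

```r
collinearity_index <- function(S) {
  S <- as.matrix(S)
  Sn <- sweep(S, 2, sqrt(colSums(S^2)), "/")
  ev <- eigen(crossprod(Sn), symmetric = TRUE, only.values = TRUE)$values
  1 / sqrt(max(min(ev), 0))
}

# Hypothetical helper: one row per parameter subset (size >= 2)
collinearity_table <- function(S, max_ok = 20) {
  pars <- colnames(S)
  rows <- list()
  for (n in 2:length(pars)) {
    for (subset in combn(pars, n, simplify = FALSE)) {
      member <- setNames(as.list(as.integer(pars %in% subset)), pars)
      rows[[length(rows) + 1]] <- data.frame(
        member, N = n,
        collinearity = collinearity_index(S[, subset, drop = FALSE]))
    }
  }
  tab <- do.call(rbind, rows)
  tab$trustworthy <- tab$collinearity < max_ok
  tab
}

# Made-up sensitivity matrix in which parameter c mimics parameter a
S <- cbind(a = c(1, 0, 0, 1), b = c(0, 1, 0, 1), c = c(1, 0, 0, 1.001))
collinearity_table(S)
```

Any subset containing both a and c gets a huge index, since their columns are almost parallel, while a and b together stay well below 20.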

This table is easy to read, but I thought that turning it into a graph might come in handy, particularly when several parameters and combinations have to be explored. First off, we 'melt' our collinearity table using the reshape package:
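The original reshape/ggplot2 code isn't shown here; as a base-R sketch of the same preparation, the block below takes a made-up table in roughly the shape I'd expect from collin() (0/1 parameter columns, N, collinearity), labels each combination, adds a factor splitting it at the threshold of 20, and builds the long ("melted") form by hand instead of calling melt().

```r
# Made-up collinearity table; parameter columns hold 0/1 membership
coll <- data.frame(a = c(1, 1, 0, 1), b = c(1, 0, 1, 1), c = c(0, 1, 1, 1),
                   N = c(2, 2, 2, 3), collinearity = c(1.4, 35.2, 3.1, 40.8))
par_cols <- c("a", "b", "c")

# A readable label per combination, e.g. "a+b"
coll$combination <- unname(apply(coll[, par_cols], 1,
                                 function(r) paste(par_cols[r == 1], collapse = "+")))

# Categorical (factor) variable for coloring, split at 20
coll$verdict <- cut(coll$collinearity, breaks = c(0, 20, Inf), right = FALSE,
                    include.lowest = TRUE,
                    labels = c("below 20: OK", "20 or above: avoid"))

# Base-R stand-in for reshape::melt(): one row per (combination, parameter)
long <- data.frame(
  combination = rep(coll$combination, times = length(par_cols)),
  parameter   = rep(par_cols, each = nrow(coll)),
  included    = unlist(coll[, par_cols], use.names = FALSE),
  verdict     = rep(coll$verdict, times = length(par_cols))
)
long
```

From here, a tile plot in ggplot2 (parameter on one axis, combination on the other, fill by verdict) is one untested possibility for the final graph.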

As you may see, we also create some categorical (i.e. factor()) variables, which make for easy coloring of the table. Then, we can finally plot:

And here's the final result:

This graph suggests, like the table it is derived from, that all parameters can be fitted together (with these specific data) and that a reliable fit can be found...

If only my real-life example were so easy...

Homework: try adding some random noise to the X, Y, Z values of the target, and see whether the collinearity analysis changes. I'll try to update this post with a tentative walkthrough in the next few days. For now, enjoy!

Sunday, July 8, 2012

Friday, June 22, 2012

Plotting non-overlapping circles...

It's a holiday today in Sweden, so happy Midsummer to everyone!!! (I know, it's belated)

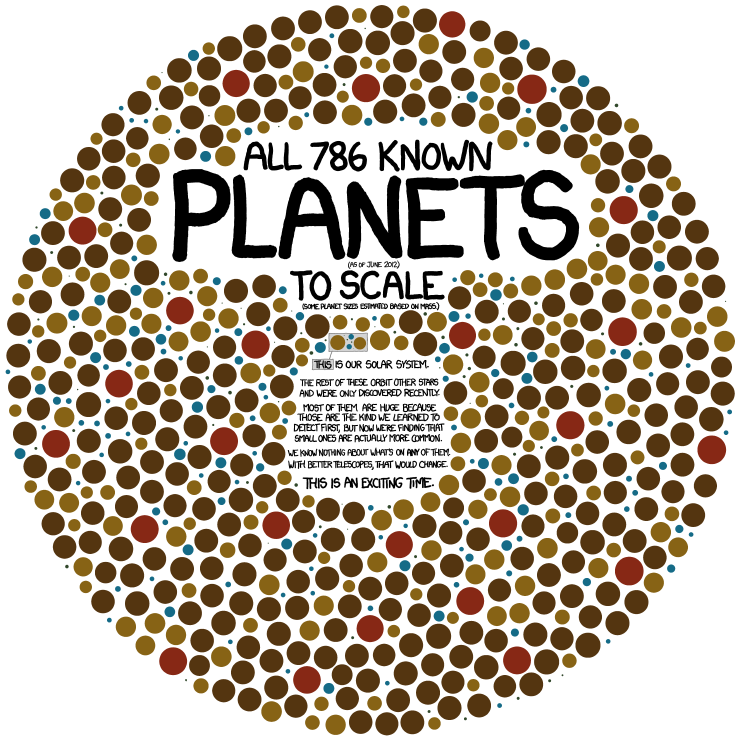

No work for me, so I checked the latest XKCD:

Gorgeous, right? So I decided to see if I could make something similar - but being lazy, I didn't feel like drawing all circles one by one... I decided to try and have R do it for me instead.

The trick is to draw the n-th circle without plotting over the previous (n-1). The rest is eye candy.

So, first of all, you need a data set: you can get the original one from (I presume) exoplanets.eu, if you like. At first I tried, but then I decided to just use a randomly generated set:


Note that I sort the exoplanets by decreasing mass (see the sort() call below), so that they'll be plotted from the largest to the smallest... it makes it easier to generate the plot later on, a bit like it's easier to fill a bucket with rocks, then gravel, then sand, than the other way around...

N<-650 # number of exoplanets

R<-1000 # radius of a 2D-circle where you'll want to plot them

MinMass<-5; # minimum mass for the planets

MaxMass<-100; # maximum mass for the planets

P<-6 # power exponent for the mass distribution

Q<-3 # this exponent scales the mass to radii...

margin<-mean(c(MinMass,MaxMass)); # a minimum separation you want to maintain between planets when plotted

# a function to name each planet with a random string of letters (optional)

getRandString<-function(len=8) return(paste(sample(c(LETTERS,letters),len,replace=TRUE),collapse=''))

exoplanets<-list(Mass=sort(runif(N, MinMass^(1/P), MaxMass^(1/P))^P, decreasing=TRUE), Name=replicate(n=N, getRandString()))

Ok, so now we have a bunch of data which looks like this:

> exoplanets$Name[1:5]

[1] "lRnrqjcQ" "YuhcqSTO" "MmrAZvzi" "ROMpQwlZ" "epnBwmtj"

> exoplanets$Mass[1:5]

[1] 99.98406 99.43530 99.26070 98.80203 98.50710

Next we define a matrix in which to store the x and y values needed for plotting, together with a function to generate those values:

mtrx<-matrix(nrow=N, ncol=2); colnames(mtrx)<-c('x','y')

getxy_withinR<-function(R=10) {

r<-runif(1,0,R^2)^(1/2); theta<-runif(1,0,2*pi);

x<-r*cos(theta)

y<-r*sin(theta)

return(c(x,y))

}

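As some of you may have spotted, the x/y values are generated within a circle of a given radius, at a randomly chosen angle theta. Importantly, in order not to crowd the origin, the radius is taken as the square root of a uniform draw between 0 and R^2: since the area within radius r grows as r^2, this spreads the points evenly over the disc, whereas drawing the radius itself uniformly would pile them up near the centre...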
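A quick numerical check of the square-root trick in getxy_withinR(): with points spread evenly over the disc, about a quarter of them (the ratio of the two areas) should fall within R/2, whereas a naive uniform radius puts about half of them there. The function below is the post's, written a bit more compactly with the same behaviour; the sample size and seed are arbitrary.

```r
getxy_withinR <- function(R = 10) {
  r <- sqrt(runif(1, 0, R^2))      # same as runif(1, 0, R^2)^(1/2)
  theta <- runif(1, 0, 2 * pi)
  c(r * cos(theta), r * sin(theta))
}

set.seed(1)
R <- 1000
pts <- t(replicate(20000, getxy_withinR(R)))        # 20000 x 2 matrix
frac_inner <- mean(sqrt(rowSums(pts^2)) < R / 2)    # roughly 0.25
naive_r <- runif(20000, 0, R)                       # uniform radius instead
frac_naive <- mean(naive_r < R / 2)                 # roughly 0.5
c(sqrt_trick = frac_inner, naive = frac_naive)
```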

Now it's time to set up a function to check whether a bubble is bumping into any other:

check_bounce <- function(x,y,rad,X,Y,RAD) {

distance<-sqrt((X-x)^2 + (Y-y)^2); # print (paste("distance is ",distance))

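radsum<-RAD^(1/Q)+rad^(1/Q)+margin; # print(paste("radius sum is ",radsum))

# note: the circles are drawn below with radius Mass^(2/Q), not Mass^(1/Q); with the values above, 'margin' alone (52.5) exceeds the sum of any two drawn radii (at most 2*100^(2/3), about 43), so the drawn circles still never overlap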

return(sum(distance < radsum))

}

I just compute the distance from every bubble placed so far and count how many are closer than the sum of the two radii plus the margin... The function will be applied shortly, but first let's draw an empty plot:

lim<-R+MaxMass

library(plotrix) # provides draw.circle(), used in the loop below

plot(NULL, xlim=c(-lim,lim), ylim=c(-lim,lim))

Now it's time to loop over all the bubbles: generate their coordinates, check whether they bump into others, and draw them in many colors...

for (n in 1:N) {

print(paste("placing",n, exoplanets$Name[n]))

# generate new coordinates

xy<-getxy_withinR(R); x<-xy[1]; y<-xy[2];

if (n!=1) {

tocheck<-(1:(n-1))

while (check_bounce(x, y, exoplanets$Mass[n], mtrx[tocheck,'x'], mtrx[tocheck,'y'], exoplanets$Mass[tocheck]) > 0)

{

xy<-getxy_withinR(R); x<-xy[1]; y<-xy[2];

}

}

mtrx[n,'x']<-x; mtrx[n,'y']<-y;

# draw a circle once you know where

exoplanets$X[n]<-xy[1]; exoplanets$Y[n]<-xy[2];

draw.circle(x=exoplanets$X[n], y=exoplanets$Y[n], radius=exoplanets$Mass[n]^(2/Q), col=rgb(runif(1,0,1),runif(1,0,1),runif(1,0,1),(0.5+n/(2*N))), border=rgb(runif(1,0,1),runif(1,0,1),runif(1,0,1),(0.5+n/(2*N))))

# Sys.sleep(0.03)

}

I draw the large ones first and keep them semi-transparent, decreasing the transparency as I go, in the hope that this will make it easier to spot new bubbles appearing when the plot gets crowded... Judge for yourself whether it works:

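I know, it doesn't really look like Randall's drawing at XKCD: it's tricky to pack all the bubbles tightly without overlapping them... and the dumb algorithm I came up with to check for bumps doesn't scale very well, since every new bubble is compared against all the previous ones and keeps being re-drawn at random until it fits.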
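One cheap safeguard for that random re-drawing (my addition, not part of the original code) is to cap the number of attempts per bubble and leave the ones that don't fit unplaced, so the loop can't hang once the disc is nearly full. place_circles() and max_tries are hypothetical names; the radii are taken as given rather than derived from masses.

```r
# Hypothetical helper: random sequential placement with a retry cap.
# radii should be sorted largest first, as in the post.
place_circles <- function(radii, R, margin = 0, max_tries = 1000) {
  n <- length(radii)
  xy <- matrix(NA_real_, nrow = n, ncol = 2, dimnames = list(NULL, c("x", "y")))
  for (i in seq_len(n)) {
    placed <- which(!is.na(xy[, "x"]))
    for (attempt in seq_len(max_tries)) {
      r <- sqrt(runif(1, 0, R^2))          # uniform over the disc's area
      theta <- runif(1, 0, 2 * pi)
      x <- r * cos(theta)
      y <- r * sin(theta)
      d <- sqrt((xy[placed, "x"] - x)^2 + (xy[placed, "y"] - y)^2)
      if (all(d >= radii[placed] + radii[i] + margin)) {
        xy[i, ] <- c(x, y)
        break
      }
    }
  }
  xy  # rows still NA could not be placed within max_tries attempts
}

set.seed(42)
radii <- sort(runif(30, 5, 20), decreasing = TRUE)
xy <- place_circles(radii, R = 500, margin = 2)
```

This only keeps the loop from running forever: each candidate is still compared against every bubble already placed, so it doesn't fix the scaling issue.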

I've toyed with the idea of making the bubbles into a dynamic system with some attraction and repulsion, letting it evolve to an equilibrium state... But I didn't want to get too bogged down with it... perhaps later...

For the moment, it's close enough. It's sunny outside and I deserve some cycling time.

Never mind my dumb attempt - here's a better solution in a blog post I wasn't aware of: http://www.r-bloggers.com/circle-packing-with-r/

Sunday, June 3, 2012

Coding a dynamic system and controlling it via a graphical user interface

My work, in the past year, has consisted mostly of coding dynamic models in R, models which I will soon be exporting to a server-based R implementation, possibly thanks to rApache.

I usually run my models through an input file where I specify all the parameters needed, but for the end users we felt it would be better to provide a graphical user interface where they could select just a few parameters, with the others defaulting to meaningful values.

With this post I want to quickly illustrate all that's needed to put such a system together, except for the rApache part: I haven't taken any steps in that direction yet.

So, let's start by defining a dynamic model, which we'll integrate using the deSolve package. We use the Lorenz system, which is far simpler than any of the models I actually work with, and produces much more beautiful and interesting graphics, too.
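The model code isn't reproduced here, so here is a sketch that assumes the Lorenz formulation and values from the deSolve documentation (a = -8/3, b = -10, c = 28, starting from X = Y = Z = 1). The derivative function is written in the form deSolve's ode() expects. So that the block runs without extra packages, it's integrated with a small hand-written RK4 loop (my stand-in, not part of deSolve) that returns a matrix with the same time/X/Y/Z columns as ode()'s output.

```r
# Lorenz equations in deSolve's func(t, state, parms) form; with deSolve
# installed you would call ode(y = state, times = times, func = lorenz, parms = parms)
lorenz <- function(t, state, parms) {
  with(as.list(c(state, parms)), {
    dX <- a * X + Y * Z
    dY <- b * (Y - Z)
    dZ <- -X * Y + c * Y - Z
    list(c(dX, dY, dZ))
  })
}

# Hypothetical minimal fixed-step RK4, returning columns time, X, Y, Z
rk4 <- function(y0, times, func, parms) {
  out <- matrix(NA_real_, nrow = length(times), ncol = length(y0),
                dimnames = list(NULL, names(y0)))
  y <- y0
  out[1, ] <- y
  for (i in seq_along(times)[-1]) {
    t <- times[i - 1]
    h <- times[i] - t
    k1 <- func(t, y, parms)[[1]]
    k2 <- func(t + h / 2, y + h / 2 * k1, parms)[[1]]
    k3 <- func(t + h / 2, y + h / 2 * k2, parms)[[1]]
    k4 <- func(t + h, y + h * k3, parms)[[1]]
    y <- y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    out[i, ] <- y
  }
  cbind(time = times, out)
}

parms <- c(a = -8/3, b = -10, c = 28)
state <- c(X = 1, Y = 1, Z = 1)
times <- seq(0, 50, by = 0.01)
out   <- rk4(state, times, lorenz, parms)
head(out)
```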

OK, if you run this, you'll obtain a variable called 'out', which contains the X/Y/Z coordinates of your system in phase space at different time instants. You can look at the values directly, but plotting is obviously a good option. Taking advantage of the 'multiplot' function defined in the Cookbook for R, we can write:

Which will generate the following picture:

I made use of the alpha channel to give some sense of depth to all the pictures. I would love to plot a 3D version of the Lorenz attractor in the fourth panel (lower right); however, I didn't want to get bogged down in defining a rotation/projection matrix.
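For the record, the rotation/projection step needn't be too heavy. Below is a sketch with two rotation matrices (about the z axis, then about the x axis), an orthographic projection that simply drops the depth coordinate, and a helper that turns depth into alpha values. All names and angles are my own and nothing here comes from the original code.

```r
# Rotation about the z axis by phi, and about the x axis by psi
rot_z <- function(phi) matrix(c(cos(phi), sin(phi), 0,
                                -sin(phi), cos(phi), 0,
                                0, 0, 1), nrow = 3)
rot_x <- function(psi) matrix(c(1, 0, 0,
                                0, cos(psi), sin(psi),
                                0, -sin(psi), cos(psi)), nrow = 3)

# Rotate the points (rows of xyz), then keep the first two coordinates
# as screen position and the third as depth (orthographic projection)
project <- function(xyz, phi, psi) {
  M <- rot_x(psi) %*% rot_z(phi)
  rotated <- xyz %*% t(M)
  list(screen = rotated[, 1:2, drop = FALSE], depth = rotated[, 3])
}

# Hypothetical helper: map depth linearly onto alpha in [min_alpha, 1]
depth_alpha <- function(depth, min_alpha = 0.15) {
  min_alpha + (1 - min_alpha) * (depth - min(depth)) / diff(range(depth))
}
```

With the 'out' matrix from the model, something like pr <- project(out[, c("X", "Y", "Z")], pi/6, pi/4) followed by plot(pr$screen, pch = 16, cex = 0.3, col = rgb(0, 0, 1, depth_alpha(pr$depth))) should give a rotated view in the fourth panel; I haven't checked how it looks.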

So far, there's no GUI: all this happens at the command line or, if you prefer, in a simple R script.

Unless, that is, you also define a gWidget which can actually control your model, like this:

To draw this, you just need a few lines of code in your R script, plus some functions to handle events (that is, you clicking the button or changing parameter values).

As a matter of fact, we could also embed the graphical output within the GUI window, either beside the controls or in another tab. Perhaps I'll update the post later on to reflect that.

Monday, May 28, 2012

Again on polar/star/pie charts

I haven't had much time to devote to new visualisations, mostly because work and baby have taken precedence.

But I just wanted to take a few minutes to share the latest version of the script I showed last time.

It now saves to a pdf file, but that's not the biggest change. I included one more slice, obtained from the others with a formula (e.g. the average or the geometric mean; in this case, the sum), which is used to rank the pies accordingly. The new value is represented as a white wedge on top of everything else, with its value pasted over it. The script wraps the compounds, fitting only a limited number on each page, then moves on to the next page.
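The script itself isn't included here, but the ranking-and-paging logic can be sketched in base R. This assumes a made-up compound-by-property matrix, uses the sum as the scoring formula (as in this version of the script), and a page size of 2 chosen only for the example.

```r
# Made-up property matrix: one row per compound, one column per property
props <- matrix(c(0.9, 0.2, 0.5,
                  0.4, 0.8, 0.7,
                  0.1, 0.3, 0.2,
                  0.6, 0.6, 0.9,
                  0.5, 0.1, 0.4), ncol = 3, byrow = TRUE,
                dimnames = list(paste0("cpd", 1:5), c("a", "b", "c")))

score  <- rowSums(props)                 # the extra "slice": here, the sum
ranked <- props[order(score, decreasing = TRUE), , drop = FALSE]

per_page <- 2                            # compounds per pdf page
page  <- ceiling(seq_len(nrow(ranked)) / per_page)
pages <- split(rownames(ranked), page)   # compound names, page by page
pages
```

Inside a single pdf() device, printing one plot per element of 'pages' would put each group on its own page, since every new plot starts a new page.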

There are a lot of things which could be changed, such as showing the value as a bubble at the centre rather than as a new wedge, to preserve the pies' proportions... or just showing the 'score' in a corner of the plot, with other info at the bottom... None of this is implemented yet, sorry. The commented-out code shows some graphical alternatives which I tried and set aside for the moment.

Code follows:

Wednesday, May 16, 2012

My take on polar bar (a.k.a. consultant's) charts

Once upon a time, when I was working at Johnson & Johnson (pharma branch), I was surrounded by a bunch of programmers developing (among other things) a nifty piece of software for internal use. Part of it was later released as freeware, called Vlaaivis. The main idea was to visualize each compound's many properties at once, with each property represented by a slice of the pie. For each property, it was possible to define an ideal value, or range, and values above or below it would show up as incomplete slices in two shades of the same color... This way, the fuller the pie, the better, or more 'ideal', the compound under examination.

You may find it still at its home, http://www.vlaaivis.com/.

As I moved on to different things, I still remembered this as a good way of visualising multifactorial data, especially when comparing several candidates (compounds, in my case). Inspired by a post on LearnR, I decided to start reimplementing something similar in R for use within our own group.

The most significant change from the LearnR post is that I added a 'facet_grid()' call to the ggplot, so as to split the polar bar chart into the different compounds. Thanks to the faceting, all compounds are plotted on the same 'space' and are therefore immediately comparable.

The other, minor, change I made was to create the data as a matrix, which is how we usually store this kind of data, and to use 'melt', from the 'reshape' package, to convert it into a form suitable for plotting as a bar chart (polar or not). I left in the comments an option for reading the data from a text file...
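If you'd rather skip the reshape dependency, base R can do much the same conversion: as.table() on a matrix with named dimnames, followed by as.data.frame(), gives one row per (compound, property) pair with the cell value alongside, which is essentially what melt() returns for a matrix. The matrix below is made up.

```r
# Made-up compound x property matrix with named dimensions
m <- matrix(c(0.8, 0.3, 0.5, 0.9, 0.2, 0.6), nrow = 2,
            dimnames = list(compound = c("cpd1", "cpd2"),
                            property = c("a", "b", "c")))

# Long format: columns compound, property and value
long <- as.data.frame(as.table(m), responseName = "value")
long
```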

Here's the dummy template I came up with:

And here's my output:

If, as I described previously, the bars were some kind of normalised score, such as the recently suggested druglikeness score, then the fuller the pie, the better the compound would look to a medicinal chemist.

I omitted the legend, since the variable names (a-e) are present in each plot (does anyone know how I can get rid of the 0.5 legend key? It comes from the alpha definition in the ggplot).

Two major things left to do:

- I would like to plot the compounds in order of 'fullness'. A sort/order snippet is in the code, and the new ordering survives the melting of the matrix; however, ggplot seems to rearrange the data according to some internal order... (Thanks to Christoph for fixing this)

- Right now, the code isn't suitable for too many compounds, since facet_grid() arranges them horizontally. I would be grateful if someone could let me know how to automatically arrange them in a grid with a given maximum number of columns... I know how to do that when I explicitly create each plot, but then I lose the ease of comparison that comes from all compounds being plotted on the same scale... (Thanks to Christoph for fixing this too)
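Both points come down to standard ggplot2 behaviour. Facets, like discrete axes, follow the order of the factor levels rather than the row order of the data frame, which is why sorting the rows alone doesn't stick. facet_wrap() with its ncol argument wraps the panels into a grid while keeping a shared scale. I don't know exactly how Christoph's fix was written; the sketch below shows only the factor-level part, on made-up data.

```r
# Made-up long-format data: one row per (compound, property)
long <- data.frame(compound = rep(c("cpd1", "cpd2", "cpd3"), each = 2),
                   property = rep(c("a", "b"), times = 3),
                   value    = c(0.2, 0.3, 0.9, 0.8, 0.5, 0.4))

# 'Fullness' of each pie = sum of its slices
fullness <- tapply(long$value, long$compound, sum)

# Fix the facet order by setting the factor levels explicitly
long$compound <- factor(long$compound,
                        levels = names(fullness)[order(fullness, decreasing = TRUE)])
levels(long$compound)
```

With ggplot2, using facet_wrap(~ compound, ncol = 4) instead of facet_grid() should then lay the pies out fullest first, at most four per row.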

I'll update this text and the code as I improve the visualization.

If you don't feel like messing around with code, you can always try to build a similar plot using Deducer:

http://www.r-statistics.com/2010/08/rose-plot-using-deducers-ggplot2-plot-builder/

Hope one or more of you find this useful!

Monday, May 14, 2012

Plotting data and distribution simultaneously (with ggplot2)

Ever wanted to see at a glance the distribution of your data across different axes? It happens to me often, and R allows you to build a nice plot composition - this is my latest concoction. I used ggplot2 here, but equivalent graphics can be made using either base graphics or lattice.

Well, I hope the code is clear - this time I commented it a bit more...

Saturday, May 12, 2012

My own version of bubble plot (part 1)

During one of my projects, I found myself needing to visualize more than 3 dimensions at once. Three-dimensional graphs are usually not a good solution: for a start, they need to be properly oriented, and that's tricky.

So, I started looking at bubble plots. The size of the bubble can show one property, as illustrated by the nice post at FlowingData - then you can show one more property defined by a color scale (continuous below, but nothing stops it from being categorical)

I decided to push it further and show two properties: look at the example below. The redder the color, the higher the value of property ApKUpt (or whatever you want); the greener, the higher ApVUpt. I moved the color legend to a square on the extreme right to make better use of the available space.
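The two-property coloring boils down to feeding one rescaled variable into the red channel and the other into the green one: black means low on both, yellow means high on both. A minimal sketch with made-up values and a hypothetical rescale01() helper:

```r
# Hypothetical helper: linear rescaling to [0, 1]
rescale01 <- function(v) (v - min(v)) / (max(v) - min(v))

# Made-up values of the two properties for four bubbles
ApKUpt <- c(2, 5, 8, 8)
ApVUpt <- c(1, 1, 4, 7)

# Red encodes ApKUpt, green encodes ApVUpt, blue is unused
cols <- rgb(red   = rescale01(ApKUpt),
            green = rescale01(ApVUpt),
            blue  = rep(0, length(ApKUpt)))
cols
# e.g. plot(x, y, cex = size, pch = 19, col = cols) to draw the bubbles
```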

I tried three colors, but it turns out that it just doesn't work. Even when your eyes don't interpret every RGB triplet as a completely different color, the amount of redness, greenness or blueness is difficult to estimate. Also, it gets tricky to show the color grading in a legend... One has to resort to slices of the three-dimensional color space. See what I mean?

Of course, one can define an ad-hoc color scale, such as the one used below, vaguely inspired by the colors that Mathematica uses to paint its surfaces. Many thanks to my colleague Pär for teaching me how to define these kinds of color scales, and much else.
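A scale like that can be built with base R's colorRampPalette(), which interpolates between a few anchor colors. The anchors below are placeholders, not the actual scale from the plot, and value_to_color() is a hypothetical helper mapping values onto the palette.

```r
# Placeholder anchor colors; swap in your own
ad_hoc_scale <- colorRampPalette(c("darkblue", "purple", "orange", "yellow"))
palette100 <- ad_hoc_scale(100)

# Hypothetical helper: map each value linearly onto one of the palette's colors
value_to_color <- function(v, palette = palette100) {
  n <- length(palette)
  idx <- 1 + round((v - min(v)) / (max(v) - min(v)) * (n - 1))
  palette[idx]
}

value_to_color(c(0, 2.5, 10))
```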

Here follows the code for the one-, two- and three-color plots. It's messy and not at all clean, but it gets the job done. This routine also depends on several others which define the color scale and other accessory functions... feel free to drop me a line in the comments if you want the lot. Similar plots can be obtained with ggplot2 in far fewer lines, although right now I'm less expert at it, so mine are much less customised.

First Post: Welcome to this new blog!!!

It's been almost a year since I started using R as my main programming/analysis tool.

I like the fact that so many beautiful graphics can be produced directly within R.

Although I often just use the basic functionality, my work often pushes me to develop more complex visualisations, which I'd like to share with others so that my efforts aren't wasted once I'm done with them.

Here I'll do my best to share them, in the hope that they may be useful to someone, and that more expert users may point out improvements to the code as well.

Later on I'll add this blog to the R-Bloggers feed so that I can contribute back to where I picked up so much inspiration.

Update - 15/05/2012 - I just added the feed to R-Bloggers; to celebrate, I'll do my best to put out a nice pie chart: my take on the consultant charts!
